Monster Scale Summit

Curious how leading engineers tackle extreme scale challenges with data-intensive applications? Join Monster Scale Summit (free + virtual). It’s hosted by ScyllaDB, the monstrously fast and scalable database.

Agenda

Introduction

Before delving into this week’s tasks, it’s important to understand what you will implement. This week, you will implement a basic log-structured merge-tree (LSM tree).

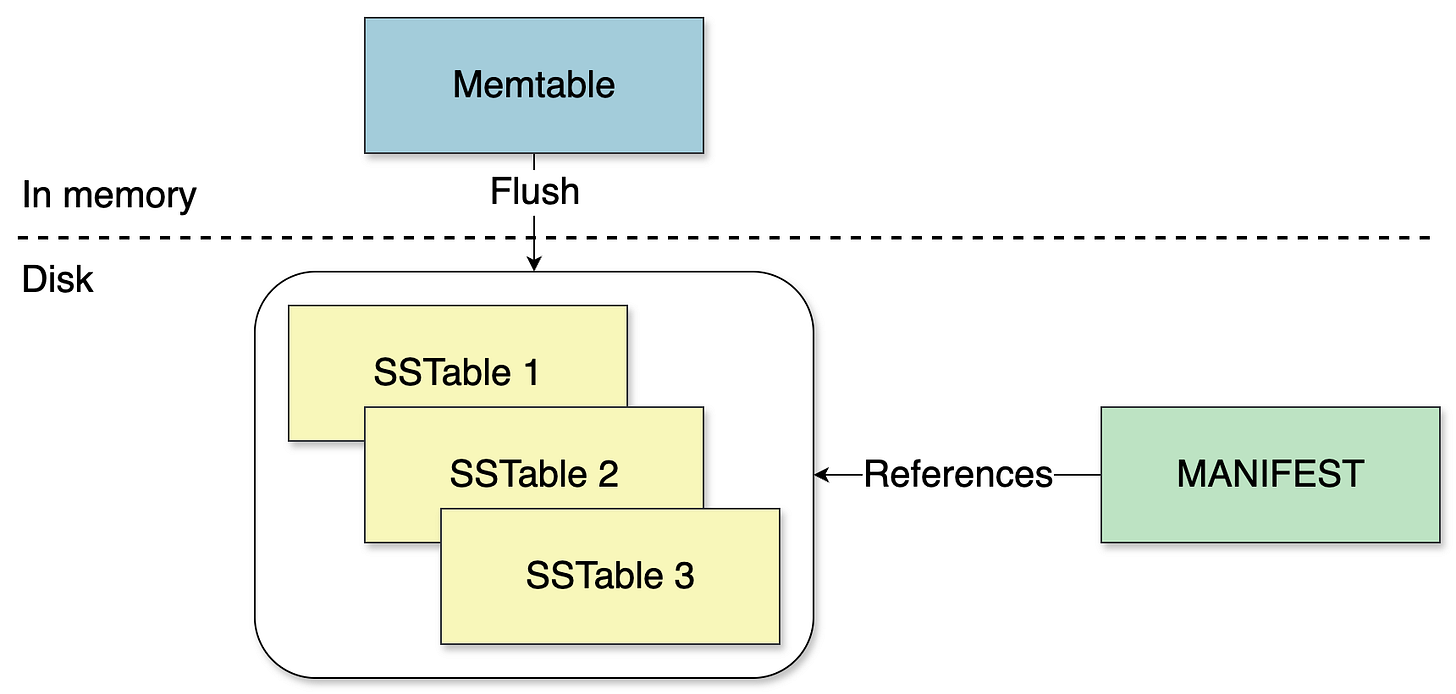

At its core, an LSM tree is a data structure that prioritizes write efficiency by trading off some read complexity. It buffers writes in memory and uses append-only files on disk, then rewrites data during compaction. It consists of two main components:

A mutable in-memory data structure called a memtable, used to store recent writes.

A set of immutable SSTables (Sorted String Table) stored on disk.

Regularly, the current memtable is snapshotted, its entries are sorted by key, and a new immutable SSTable file is written.

In addition, a MANIFEST file is an append-only list of SSTable filenames. It tells the engine which SSTable files exist and in which order to read them, newest to oldest.

Why LSM trees shine for write-heavy workloads:

Fast writes with sequential I/O: New updates are buffered in memory (memtable) and later written sequentially to disk during a flush (SSTable), which is faster than the random I/O patterns common with B-trees, for example.

Decouples writes from read optimization: Writes complete against the memtable, while compaction work runs later (you will tackle that in a future week).

Space and long-term efficiency: Compaction processes remove dead data and merge many small files into larger sorted files, which keeps space usage in check and sustains read performance over time.

For the memtable, you will start with a hashtable. In a future week, you will learn why a hashtable is not the most efficient data structure for an LSM tree, but it is a simple starting point.

For the SSTables, you will use JSON as the data format. Get comfortable with a JSON parser if you are not already.

Your Tasks

💬 If you want to share your progress, discuss solutions, or collaborate with other coders, join the community Discord server (#kv-store-engine channel):

Assumptions

This week’s implementation is single-threaded. You will revisit that assumption later.

Memtable

Implement a hashtable to store PUT requests (create or update). You can probably reuse a lot of code from Week 1.

Flush

When your memtable contains 2,000 entries:

Flush the memtable as a new immutable JSON SSTable file with keys sorted. The SSTable file is a JSON array of objects, each with two fields,

keyandvalue. Keys are unique within a file.For example, if your memtable contains the following entries:

a = hello b = world z = 42You need to create the following SSTable:

[ { “key”: “a”, “value”: “hello” }, { “key”: “b”, “value”: “world” }, { “key”: “z”, “value”: “42” } ]Use a counter for the filename prefix, for example

sst-1.json,sst-2.json,sst-3.json.After writing the new SSTable, append its filename to the MANIFEST (append only), then clear the memtable:

sst-1.json sst-2.json sst-3.json <- new

For now, the flush is a stop-the-world operation. While the file is being written, do not serve reads or writes. You will revisit that later.

Startup

Create an empty

MANIFESTfile if it doesn’t exist.Derive the next SSTable ID from the MANIFEST so you don't reuse the same filename.

GET

Update GET /{key}:

Check the memtable:

If found, return the corresponding value.

If not found, read the MANIFEST to list SSTable filenames:

Scan SSTables from newest to oldest (for example

sst-3.json, thensst-2.json, thensst-1.json). Use a simple linear scan inside each file for now. Stop at the first hit and return the corresponding value.If still not found, return

404 Not Found.

Client & Validation

There are no changes to the client you built in week 1. Run it against the same file (put.txt) to validate that your changes are correct.

[Optional] Negative Cache

Keep a small LRU cache of known-absent keys (negative cache) between the memtable and SSTables. This avoids repeated disk scans for hot misses: after the first miss, subsequent lookups are O(1).

Implementation details are up to you.

[Optional] MANIFEST Cache

Instead of parsing the MANIFEST file for each GET request, you can cache the content in-memory.

Wrap Up

That’s it for this week! You have built the first version of an LSM tree: a memtable in memory, SSTable files written by regular flushes, and a MANIFEST that lists those SSTables.

For now, durability isn’t guaranteed. Data already flushed to SSTables will be read after a restart, but anything still in the memtable during a crash is lost.

In two weeks, you will make sure that any request acknowledged to a client remains in your storage engine, even after a restart.

Further Notes

The flush trigger you used was pretty simple: once the memtable contains 2,000 entries. In real systems, flushes can be triggered by various factors, for example:

Some databases flush when the memtable reaches a target size in bytes, ensuring predictable memory usage.

A flush can also occur after a period of time has passed. This occurs because the database eventually needs to release commit log segments. For tables with very low write activity, this can sometimes lead to data resurrection scenarios. Here’s an old issue from the ScyllaDB codebase that illustrates this behavior.

Regarding the model, this series assumes a simple key–value one: every PUT stores the whole value, so a GET just finds the newest entry and returns it. If you need a richer model (e.g., rows with many fields or collections), writes are often partial (patches) rather than full replacements. Therefore, reads must reconstruct the result by scanning newest to oldest and merging changes until all required fields are found or a full-write record is encountered.

Last but not least, in this series, you implicitly rely on client-side ordering: the validation client issues requests sequentially. Production KV databases typically attach a sequence number or a logical timestamp to each write to handle out-of-order arrivals, merging, and reconciling results. Pure wall-clock timestamps are convenient but brittle; see Kyle Kingsbury’s notes on clock pitfalls for a deeper dive.

Sources

The Log-Structured Merge-Tree (LSM-Tree) // The original LSM tree whitepaper.

Log Structured Merge Tree - ScyllaDB // LSM tree definition from ScyllaDB technical glossary.

❤️ If you enjoyed this post, please hit the like button.