Monster Scale Summit

Curious how leading engineers tackle extreme scale challenges with data-intensive applications? Join Monster Scale Summit (free + virtual). It’s hosted by ScyllaDB, the monstrously fast and scalable database.

Agenda

Introduction

Over the past few weeks, you built an LSM tree and three main components: a memtable, SSTables, and a WAL that records the same operations you keep in the memtable.

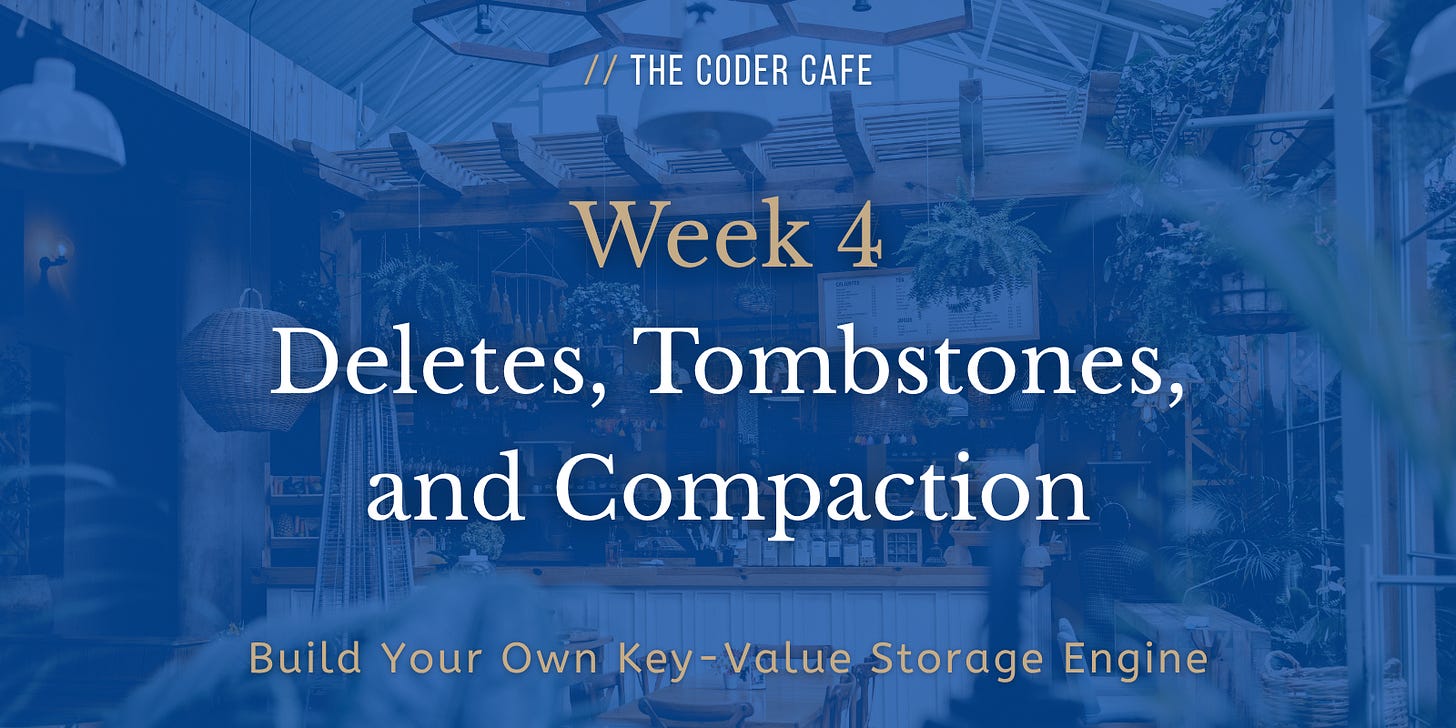

To prevent on-disk data from growing forever, you will implement compaction, a critical process in LSM trees. Compaction periodically merges SSTables to reclaim space and keep read performance predictable. For example, if key 1234 exists in every SSTable on disk:

Compaction drops duplicates and keeps only the newest record: 1234=baz.

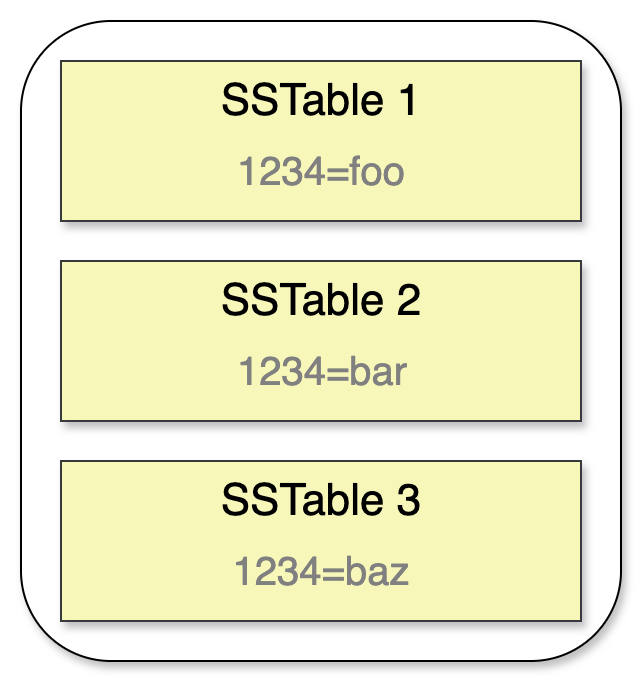

In addition, you will implement a DELETE endpoint. Handling deletes in an LSM tree isn’t straightforward at all: SSTables are immutable. To preserve the append-only nature of LSM trees, deletions are written as tombstones: markers indicating a key was logically deleted. You write it to the WAL, keep it in the memtable, and propagate it during flush.

How should compaction work in the presence of tombstones? Suppose you have the following SSTables on disk: the key exists in SST-1, doesn’t exist in SST-2, exists in SST-3, and is deleted at SST-4:

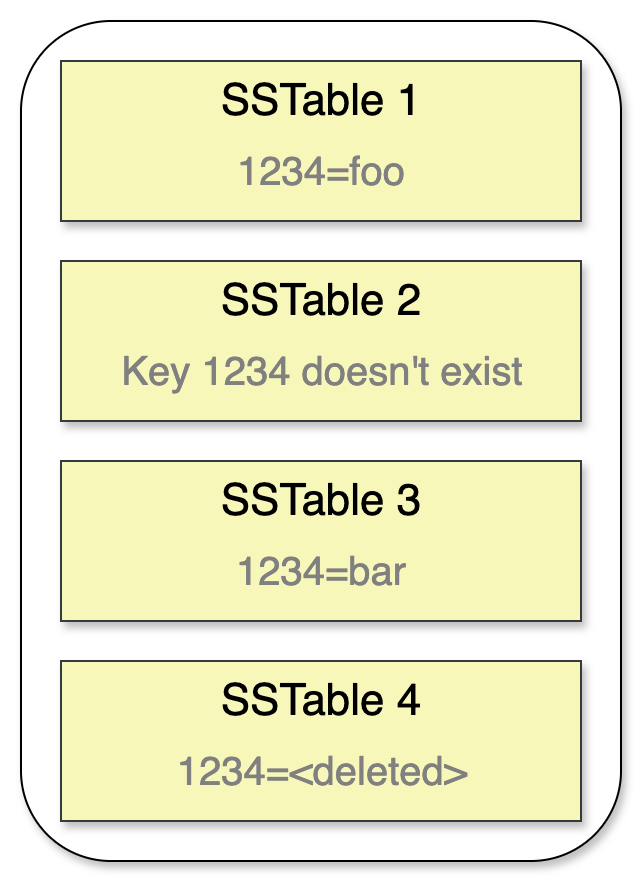

If the key doesn’t exist in the memtable, the current state for 1234 is deleted. Now, imagine that during compaction, you merge SST-3 and SST-4. As the key is marked as deleted in the newest SSTable, you may decide to drop the tombstone, as it hides the key in SST-3:

Now, GET 1234 would do:

Key doesn’t exist in the memtable → Continue.

Key doesn’t exist in SST-5 → Continue.

Key doesn’t exist in SST-2 → Continue.

Key exists in SST-1 → Return

foo(instead of404 Not Found).

The fundamental rule is the following: during compaction, a tombstone can be evicted only after all data it shadows no longer exist on disk. Otherwise, dropping a tombstone too early can make an old value reappear. This is known as data resurrection: a key that “comes back to life” after a deletion.

Your Tasks

💬 If you want to share your progress, discuss solutions, or collaborate with other coders, join the community Discord server (#kv-store-engine channel):

Assumptions

Flush and compaction should be single-threaded and stop-the-world operations: do not serve client requests until the operations complete.

DELETE Endpoint

Add DELETE /{key}:

Append a tombstone to the WAL file, with

op=delete:

{”op”:”delete”,”key”:”k”}fsyncthe WAL.Update the memtable: Do not remove the key directly; mark it deleted with a tombstone.

Acknowledge the request.

Flush

During flush, carry tombstones into the new SSTable using a new deleted field. For example:

[

...

{ “key”: “foo”, “deleted”: true },

...

]The keys must remain sorted.

Compaction

The goals of the compaction process for this week are the following:

For each key, keep only the newest record.

Drop records hidden by newer versions. This is where merging happens: the newest record wins, and older versions are evicted.

Drop tombstones when no older value remains.

The compaction trigger is: every 10,000 update requests (PUT and DELETE, not GET), compact all SSTables.

Algorithm (k-way merge using a min-heap on key):

Open an iterator for each SSTable file known by the MANIFEST.

Push each iterator’s current record into a min-heap with the following comparator:

Primary:

key.Tie-break (equal

key): Newest SSTable first based on MANIFEST order (to make sure an old value doesn’t win).

While the heap is not empty:

Pop the smallest key

k(this first pop is the newest version ofkdue to the tie-break).Drain all other heap entries whose key is

kand discard them (older values).For the record you picked:

If it’s a tombstone, emit nothing for

k.Otherwise, emit the value for

k.

Advance only the iterators you drained for

kand push their next records into the heap.

Stream emitted records (sorted) into new SSTables. Remember: the max entries in an SSTable should remain 2,000.

fsynceach new SSTable file, thenfsyncits parent directory.Update the MANIFEST atomically (see week 3).

Remove the old SSTable files.

GET

Check the memtable:

If the key is marked as deleted, return

404 Not Found.Else, return the value.

Scan SSTables from newest to oldest, given the MANIFEST order (same as before).

For the first record with the requested key:

If

deleted=true, return404 Not Found.Else, return the value.

If the key isn’t found, return

404 Not Found.

Startup

When replaying the WAL, make sure to take into account tombstone values (

op=delete).

Client & Validation

Update your client to handle

DELETE klines → Send aDELETErequest to/k.Download and run your client against a new file containing

DELETErequests: put-delete.txt.NOTE: Refer to week 1 if you need to generate your own file with the number of lines you want.

Wrap Up

That’s it for this week! Your storage engine now supports deletes and a compaction mechanism that prevents unbounded growth.

The Coder Cafe will take a break for two weeks. On January 7th, you will continue exploring LSM trees and cover leveling. In your current implementation, a miss still scans all SSTables; therefore, you will also add key range partitioning to limit the number of SSTables that need to be checked during a lookup.

See you next year!

Further Notes

The compaction trigger you used was simple: every 10,000 PUT or DELETE requests. In real systems, compaction is usually driven by factors such as too many SSTable files, space pressure, or high read amplification.

Also, many systems add safeguards to keep compaction controlled and resource-efficient. For example, a common one is bounded fan-in (merging only a small, fixed number of SSTables per batch), so the engine never opens every file at once. Others track each SSTable’s first and last key to select only overlapping candidates, hence avoiding unrelated files.

Taking a step back, it’s interesting to note that the core LSM idea—append-only writes with regular compaction—shows up in many systems, even outside pure LSM trees. For example:

Lucene: Immutable segments are created and later merged in the background, an LSM-like pattern, even though it isn’t an LSM tree per se.

Memcached Extstore: Flushes values to free RAM, but keeps the hashtable, keys, and storage pointers in memory. It later compacts the data.

Kafka: Rewrites segments to keep the latest value per key and drop older versions, which is conceptually similar to SSTable compaction.

Also, we briefly introduced the concept of key resurrection in the introduction. You should be aware that this is a common challenge with LSM trees. In real-world conditions, crashes, slow WAL truncation, and complex compaction can allow an old value to be replayed during recovery after its tombstone has been removed, leading to key resurrection. Here are two great references that delve more into this kind of problem:

Preventing Data Resurrection with Repair Based Tombstone Garbage Collection

Repair Time Requirements to Prevent Data Resurrection in Cassandra & Scylla

Another excellent reference is Acheron: Persisting Tombstones in LSM Engines. It shows how standard LSM compaction can leave tombstones stuck for long periods, so “deleted" data may still linger in lower levels and complicate compliance requirements such as GDPR/CCPA compliance. The paper introduces delete-aware techniques that prioritize pushing tombstones down the tree to make deletions persist more predictably.

Lastly, you can explore the RUM conjecture. Structurally, it’s similar to the CAP theorem: “three things, pick two”. In short, you can make a database excel at two of: reads, updates (insert/change/delete), and memory/space, but not all three at once. Make any two really good and the third gets worse; that’s an unavoidable trade-off. This helps explain why, for example, LSM trees optimized for fast updates and good space efficiency pay a cost in read performance due to read amplification.

That trade-off shows up in the design of the compaction process you implemented this week: you trade space and significant I/O for simplicity by compacting everything in one shot. This is fine for the example, but with 500GB of SSTables, you may need roughly another 500GB of free space during the merge in the worst case.

❤️ If you enjoyed this post, please hit the like button.