Monster Scale Summit

Curious how leading engineers tackle extreme scale challenges with data-intensive applications? Join Monster Scale Summit (free + virtual). It’s hosted by ScyllaDB, the monstrously fast and scalable database.

I’ll also give a talk there, so feel free to join!

Agenda

Introduction

Last week, you implemented deletion and compaction, making sure the LSM tree wouldn’t grow indefinitely.

Still, there’s a weak spot: in the worst-case scenario (e.g., on a key miss), a single read has to scan all SSTables. To address this, you will implement leveling, a core idea in LSM trees.

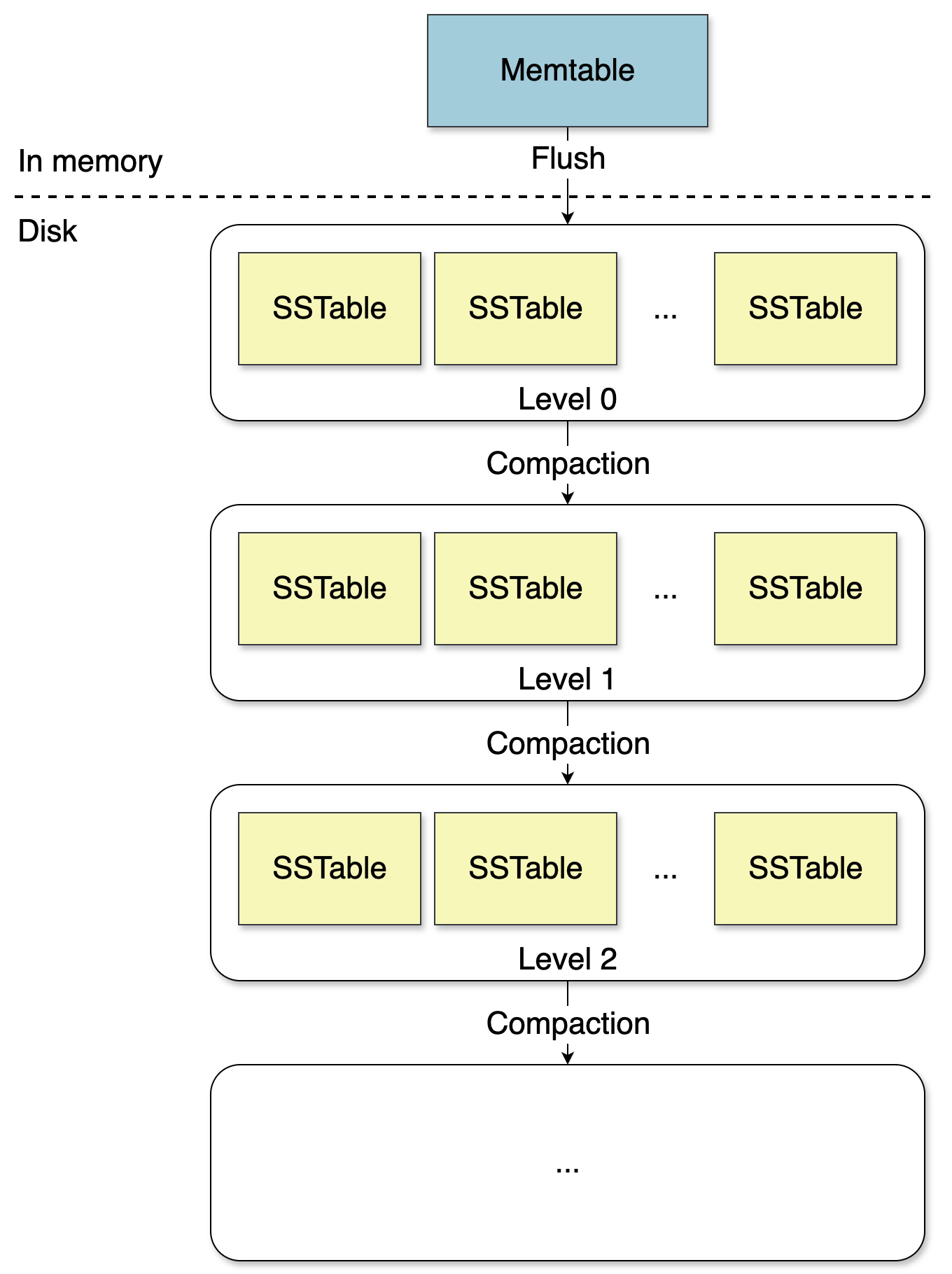

Instead of a single flat list of SSTables, leveling stores data across multiple levels: L0, L1, L2, etc.

L0gets compacted toL1and makes space for future memtable flushes.L1gets compacted toL2and makes space forL0compaction.L2gets compacted toL3and makes space forL1compaction.…

Ln-1gets compacted toLnand makes space forLn-2compaction.

This process is called level compaction.

Something important to understand: L0 is slightly different from all the other levels. L0 is created during memtable flushes. If a key already exists at L0 and also in the memtable, the next flush can write that key again to a new L0 file. In other words, L0 can have overlapping keys.

For all the other levels (L1 to Ln), that’s not the case. They are created by compaction, which removes duplicates and produces non-overlapping key ranges. In this week’s simplified design, an Li-1 to Li compaction takes all SSTables from Li-1 and Li, performs a k-way merge, then rewrites Li fully. As a result, each key appears at most once per level from L1 downward.

What’s the consequence of non-overlapping keys? You can improve lookups using a simple range-to-file mapping, for example:

Keys from

aaatobacare stored in this SSTable.Keys from

bactocadare stored in this SSTable.etc.

With this setup, a read checks only one SSTable per level from L1 to Ln. L0 is the exception due to overlaps, so a read may still need to scan all L0 SSTables.

Your Tasks

💬 If you want to share your progress, discuss solutions, or collaborate with other coders, join the community Discord server (#kv-store-engine channel):

Leveling

Limit the number of levels to two:

L0, which may contain overlapping keys.L1, no overlapping keys.

Create a folder for each level:

/l0, and/l1.Keep one global

MANIFESTfile at the root.

Manifest Layout

You will create a MANIFEST layout for both L0 and L1:

L0remains a simple list of SSTables.L1allows key-range partitioning.

For example:

[L0]

sst-11.json

sst-12.json

sst-13.json

[L1]

aaa-karate: sst-1.json

karate-leo: sst-2.json

leo-yaml: sst-3.jsonThis MANIFEST indicates:

L0is composed of three SSTables:/l0/sst-11.json./l0/sst-12.json./l0/sst-13.json.

L1:Keys between

aaa(included) andkarate(excluded) live in/l1/sst-1.json.Keys between

karate(included) andleo(excluded) live in/l1/sst-2.json.Keys between

leo(included) andyaml(excluded) live in/l1/sst-3.json.

Compaction

The main goal of the compaction process is to compact both L0 and L1. At the end, you should merge all the data from L0 and L1 into L1. L0 will be left empty.

When L0 reaches five full SSTable files (2,000 entries each), run an L0 → L1 compaction:

Open iterators on all

L0andL1SSTables.Apply the k-way merge algorithm:

Comparator:

Primary:

key.Tie-break (equal

key):Prefer

L0overL1.At

L0, prefer the newest SSTable.

Version order: any record from

L0is newer than records fromL1. WithinL0, newer files win (same as week 4).Keep at most one record per key (newest wins).

Tombstones: because

L1is the bottom level, drop a tombstone if no older value for that key remains in the merge result.Create new L1 SSTables with at most 2,000 entries.

When naming new L1 files, make sure they are unique. For example, if

/l1containssst-1.jsonandsst-2.json, the first SSTable file created should besst-3.json.

Publish atomically:

fsynceach newL1filefsyncthe/l1directory.Update the

MANIFESTatomically.fsynctheMANIFESTfile.fsyncthe root directory (the directory containing theMANIFESTfile and/l0and/l1folders).

Clean up:

Delete obsolete L1 files, then

fsync/l1.Delete all files in

/l0, thenfsync/l0.

Flush (memtable to L0)

The logic is unchanged from previous weeks. The only difference is that flush writes to L0 and updates the MANIFEST file in the [L0] section.

GET

Check the memtable.

If not found, scan all

L0files newest to oldest using section[L0]of theMANIFEST.If not found at

L0:Use the section

[L1]of theMANIFESTto choose the one shard that contains the key’s range, then read only that L1 file.Return the value if found; otherwise, return

404 Not Found.

Client & Validation

There are no changes to the client. Run it against the same file (put-delete.txt) to validate that your changes are correct.

[Optional] Configurable Number of Levels

Introducing leveling has a fundamental impact on deletions. With a single level, compaction sees all versions of every key at once, so a tombstone can be dropped as soon as it has “killed“ every older record for that key. Yet, the rule we mentioned last week holds true: a tombstone can be evicted only after all data it shadows no longer exist on disk.

With multiple levels, compaction must propagate tombstones downward. It’s only at the bottommost level Ln that tombstones can be dropped, because only there you can prove they no longer shadow any other records.

As an optional task, make the number of levels configurable: L0, L1, …, Ln:

Define a size ratio so each level has a target size larger than the previous one.

Keep one directory per level:

/l0,/l1, …,/ln.Keep a single global

MANIFEST.When a level reaches its max number of SSTables (derived from the size ratio), compact that level into the next.

Only drop tombstones at the bottommost level

Ln. At any intermediate levelLiwith0 ≤ i < n, propagate the tombstone downward during compaction.

[Optional] SCAN

Implement GET /scan?start={start}&end={end}:

Return all keys between

start(included) andend(excluded).Use put-delete-scan.txt to validate that your changes are correct. It introduces the

SCANkeyword. For example:SCAN a-c aaa,bbb,bdxThis line means: between

a(included) andc(excluded), the keys areaaa,bbb,bdx(the output will always be sorted)

NOTE: If this route conflicts with

GET /{key}, rename the single-key route toGET /keys/{key}.

Wrap Up

That’s it for this week! Your LSM tree is taking shape. You implemented leveling, a key LSM design idea, and refined compaction so reads are tighter and storage stays under control.

In two weeks, you will revisit the week 2 choice of JSON for SSTables. You will switch to block-based SSTables to reduce parsing and I/O overhead and add indexing within each SSTable.

Further Notes

We mentioned that, because of key overlaps, a read may still need to scan all L0 SSTables (e.g., key miss). This is the main reason why L0 is typically kept small. In general, each level is larger than the one above it by a fixed size ratio (e.g., 10×). Some databases even use less static mechanisms. For instance, RocksDB relies on Dynamic Leveled Compaction, where the size of each level is automatically adjusted based on the size of the oldest (last) level, eliminating the need to define each level’s size statically.

Regarding compaction, you should know that in real-world databases, it isn’t done in batch mode across all data. Let’s understand why.

Suppose you have four levels and a layout like this for one key:

The key exists at L3.

The key doesn’t exist at L2.

The key is updated at L1.

A tombstone is placed at L0.

You can’t compact L0 with L1/L2/L3 in one shot; that would mean checking every SSTable against every level.

What happens in reality is that compaction is a promotion process. In our example, the tombstone at L0 is promoted to L1. Implementations ensure that it either (a) is compacted together with the L1 SSTable it shadows, or (b) waits until that L1 data is promoted to L2. The same rule repeats level by level, until the tombstone reaches L3 and finally removes the shadowed value.

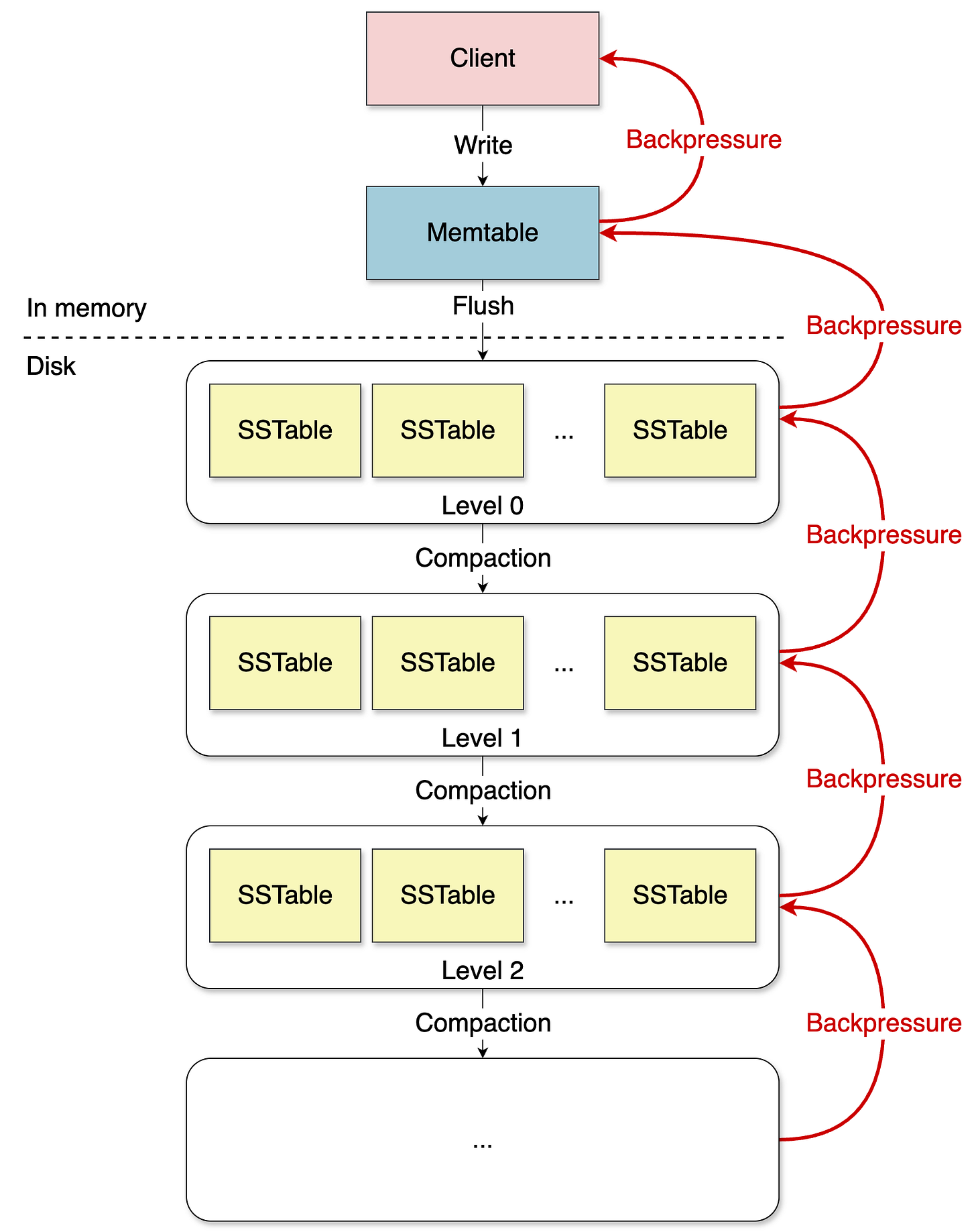

Meanwhile, it’s essential to understand that compaction is crucial in LSM trees. Let’s take some perspective to understand the reason. An LSM tree buffers writes in a memtable and flushes to L0. Compaction merges SSTables across levels to control read amplification. If compaction falls behind, L0 files accumulate, flushes slow down (or stall at file-count thresholds), write latency climbs, and in the worst case, you can observe write pauses. Not because the memtable is “locked,” but because the engine can’t safely create more L0 files until compaction catches up.

This is one of the reasons why the RUM conjecture we introduced last week is important.

If you compact too eagerly, you burn a lot of disk I/O and lose the LSM’s write advantage.

If you compact too lazily, you incur a penalty on your read path.

If you compact everything all the time, you incur a space-amplification penalty during compaction roughly equal to the working set size.

Because compaction is so important, most key-value stores support parallel compactions across levels (except L0 → L1, which isn’t parallelized due to overlapping key ranges in L0).

You should also be aware that ongoing research keeps improving compaction. For example, the SILK: Preventing Latency Spikes in LSM Key-Value Stores paper analyzes why LSM systems can exhibit high tail latency. The main reason is that limited I/O bandwidth causes interference between client writes, flushes, and compactions. The key takeaway is that not all internal operations are equal. The paper explores solutions such as

Bandwidth awareness: Monitor client I/O and allocate the leftover to internal work dynamically instead of static configuration.

Prioritization: Give priority to operations near the top of the tree (flushes and L0 → L1 compaction). Slowdowns there create backpressure that impacts tail latency more than work at deeper levels.

Last but not least, what you implemented this week is called level compaction. Other strategies like tiered compaction exist, which merge SSTables based on their size and count rather than fixed levels. You can explore this great resource from Mark Callaghan, which dives deeper into the design trade-offs and performance characteristics of different compaction strategies in LSM trees.

❤️ If you enjoyed this post, please hit the like button.

The choice to stick with JSON for SSTables early on makes sense for prototyping, but I'm curious how much the parsing overhead affects compaction when dealing with larger datasets. In production, most LSM implementations use binary formats precisely because decoding JSON on every k-way merge becomes a bottleneck. Excited to see the switch to block-based SSTables, that usualy unlocks way more optmization potential for both reads and compaction throughput.